Final Exam

Recall the Partition subroutine that we used in both QuickSort and RSelect. Suppose that the following array has just been partitioned around some pivot element:

. Which of these elements could have been the pivot element? (Hint: Check all that apply, there could be more than one possibility!)

ANSWER:

Here is an array of ten integers:

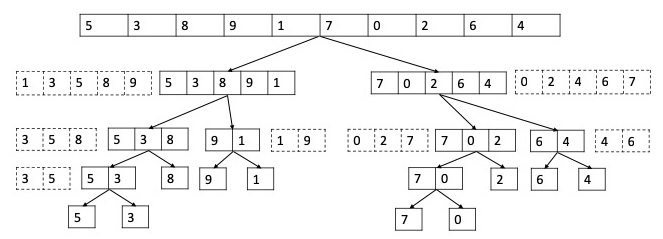

. Suppose we run MergeSort on this array. What is the number in the 7th position of the partially sorted array after the outermost two recursive calls have completed (i.e., just before the very last Merge step)? (When we say “7th” position, we’re counting positions starting at ; for example, the input array has a “0” in its 7th position.)

ANSWER: The recursion tree is shown below, solid boxes represent going down into recursion, dashed boxes represent coming up from recursion. Option 3 is correct.

What is the asymptotic worst-case running time of MergeSort, as a function of the input array length

?

ANSWER: Option 1 is correct.

What is the asymptotic running time of Randomized QuickSort on arrays of length

, in expectation (over the choice of random pivots) and in the worst case, respectively?

[expected] and [worst case] [expected] and [worst case] [expected] and [worst case] [expected] and [worst case]

ANSWER: Option 4 is correct. Worst case is when the pivot is always chosen as the first element,

thus the problem size reduces by at most

Let

and be two increasing functions, defined on the natural numbers, with . Assume that . Is (Multiple answers may be correct, check all that apply.)

- Never

- Yes if

for all sufficiently large - Maybe, maybe not (depends on the functions

and ) - Always

ANSWER: Options 2 and 4 are correct. See Problem Set 1.

Let

be some constant. Consider running the Partition subroutine on an array with no duplicate elements and with the pivot element chosen uniformly at random (as in QuickSort and RSelect). What is the probability that, after partitioning, both subarrays (elements to the left of the pivot, and elements to the right of the pivot) have size at least times that of the original array?

ANSWER: Option 3 is correct. See Probability Questions.

Suppose that a randomized algorithm succeeds (e.g., correctly computes the minimum cut of a graph) with probability

(with ). Let be a small positive number (less than ). How many independent times do you need to run the algorithm to ensure that, with probability at least , at least one trial succeeds?

ANSWER: Suppose we run the algorithm for

Since,

Suppose you are given

sorted arrays, each with n elements, and you want to combine them into a single array of elements. Consider the following approach. Divide the arrays into pairs of arrays, and use the Merge subroutine taught in the MergeSort lectures to combine each pair. Now you are left with sorted arrays, each with elements. Repeat this approach until you have a single sorted array with elements. What is the running time of this procedure, as a function of and ?

ANSWER: The Merge subroutine takes linear time, so the work done at each iteration is given by subproblem size

Since

Running time of Strassen’s matrix multiplication algorithm: Suppose that the running time of an algorithm is governed by the recurrence

. What’s the overall asymptotic running time (i.e., the value of )?

ANSWER: Master Method,

Recall the Master Method and its three parameters

. Which of the following is the best interpretation of , in the context of divide-and-conquer algorithms?

- The rate at which the total work is growing (per level of recursion).

- The rate at which the subproblem size is shrinking (per level of recursion).

- The rate at which the work-per-subproblem is shrinking (per level of recursion).

- The rate at which the number of subproblems is growing (per level of recursion).

ANSWER: Option 3 is correct.

Consider a directed graph

with non-negative edge lengths and two distinct vertices and of . Let denote a shortest path from to in . If we add 10 to the length of every edge in the graph, then: [Check all that apply.]

- If

has only one edge, then definitely remains a shortest path. definitely remains a shortest path. might or might not remain a shortest path (depending on the graph). definitely does not remain a shortest path.

ANSWER: Option 1 is trivially correct.

Option 2 is incorrect, see Problem Set 5 for an example.

Option 3 is correct, see Problem Set 4 for an example.

Option 4 is incorrect because it contradicts option 3.

What is the running time of depth-first search, as a function of

and , if the input graph is represented by an adjacency matrix (i.e., NOT an adjacency list), where as usual and ?

ANSWER: In order to find the adjacent nodes for a node, we need to look through the entire row of size

What is the asymptotic running time of the Insert and Extract-Min operations, respectively, for a heap with

objects?

and and and and

ANSWER: Option 2 is correct. See leature video 12.2 for proofs.

On adding one extra edge to a directed graph

, the number of strongly connected components…?

- …might or might not remain the same (depending on the graph).

- …cannot change.

- …cannot decrease by more than one.

- …cannot decrease

ANSWER: Option 1 is correct, see Problem Set 4 for an example.

Which of the following statements hold? (As usual

and denote the number of vertices and edges, respectively, of a graph.) [Check all that apply.]

- Breadth-first search can be used to compute shortest paths in

time (when every edge has unit length). - Depth-first search can be used to compute a topological ordering of a directed acyclic graph in

time. - Depth-first search can be used to compute the strongly connected components of a directed graph in

time. - Breadth-first search can be used to compute the connected components of an undirected graph in

time.

ANSWER: All of the options are true as explained below:

- If every edge has unit length, ignore the edge lengths, and run BFS from a single source vertex

. Stop when the vertex dequeued is the destination vertex . . - Run DFS, but instead of outputting a vertex as soon as it’s visited, put it on a stack after all of its adjacent vertices have been visited. In other words, do a reverse-postorder traversal.

. - Kosaraju’s algorithm.

. - Kosaraju’s algorithm can also be implemented by BFS as long as the post-order property is preserved. (TBD: implement).

When does a directed graph have a unique topological ordering?

- Whenever it is directed acyclic

- None of the other options

- Whenever it is a complete directed graph

- Whenever it has a unique cycle

ANSWER: 3. From here “a digraph has a unique topological ordering if and only if there is a directed edge between each pair of consecutive vertices in the topological order (i.e., the digraph has a Hamiltonian path).” (Why?)

Suppose you implement the operations Insert and Extract-Min using a sorted array (from biggest to smallest). What is the worst-case running time of Insert and Extract-Min, respectively? (Assume that you have a large enough array to accommodate the Insertions that you face.)

and and and and

ANSWER: See Problem set 5.

Which of the following patterns in a computer program suggests that a heap data structure could provide a significant speed-up (check all that apply)?

- Repeated maximum computations

- None of the other options

- Repeated minimum computations

- Repeated lookups

ANSWER: Options 1 and 3 are what heaps are good for.

Which of the following patterns in a computer program suggests that a hash table could provide a significant speed-up (check all that apply)?

- None of the other options

- Repeated maximum computations

- Repeated lookups

- Repeated minimum computations

ANSWER: Option 3 is what a hash table is good for.

Which of the following statements about Dijkstra’s shortest-path algorithm are true for input graphs that might have some negative edge lengths? [Check all that apply.]

- It is guaranteed to terminate.

- It is guaranteed to correctly compute shortest-path distances (from a given source vertex to all other vertices).

- It may or may not terminate (depending on the graph).

- It may or may not correctly compute shortest-path distances (from a given source vertex to all other vertices), depending on the graph.

ANSWER: Dijkstra’s algorithm is guaranteed to terminate in grapgs that don’t have negative weight cycles; thus, option 1 is correct.

Nonnegativity of the edge lengths was used in the correctness proof for Dijkstra’s algorithm; with negative edge lengths, the algorithm is no longer correct in general. Thus, option 2 is incorrect.

Option 3 is incorrect because it contradicts option 1.

Option 4 is correct.

Leave a comment